Deploying Rook on Kubernetes


1. A brief look at why we use Rook

I won't introduce Rook in detail here; see the official website for details.

Here is why we use Rook to deploy the Ceph cluster on Kubernetes.

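Kubernetes is widely regarded as the leading cloud-native container platform, but it gives containers no persistent storage of their own: when a pod is removed from a node, its container data is deleted with it. Ceph is one of the best open-source storage systems and one of the best storage backends to pair with Kubernetes. Rook ties the two together, combining Kubernetes scheduling with Rook's own self-scaling and self-healing capabilities.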

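| Item | Version | Notes |
|---|---|---|
| OS | CentOS 7.6 | One 200 GB data disk |
| Kubernetes | v1.14.8-aliyun.1 | Nodes with a data disk attached are scheduled as OSD nodes |
| Rook | v1.1.4 | - |
| Ceph | v14.2.4 | - |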

Note:

OSDs need at least 3 nodes. Performance is best when the OSDs use raw disks directly rather than partitions or filesystems.
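A quick pre-flight check for the raw-disk recommendation can be sketched with `lsblk`. The helper `is_raw_disk` is hypothetical (not part of Rook): it treats a device as raw when lsblk lists no partitions or other children under it and reports no filesystem signature on it. `/dev/vdb` is the data disk in this article's environment; substitute your own device. This is only a heuristic, not the check Rook itself performs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the block device looks unused (raw),
# i.e. lsblk shows no child devices and no filesystem signature.
is_raw_disk() {
  local dev="$1" rows fstypes
  # -n: no header, -r: raw output, -o NAME: one line for the disk plus one per child
  rows=$(lsblk -n -r -o NAME "$dev" | wc -l)
  # FSTYPE is empty when no filesystem/LVM signature is present
  fstypes=$(lsblk -n -r -o FSTYPE "$dev" | tr -d '[:space:]')
  [ "$rows" -eq 1 ] && [ -z "$fstypes" ]
}
```

For example, `is_raw_disk /dev/vdb && echo "/dev/vdb looks raw"` can be run on each candidate OSD node before it is added to the cluster.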

Here we install with Helm; its advantages need no elaboration.

Reference documentation:

https://rook.io/docs/rook/v1.1/helm-operator.html
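The operator installation from the reference document can be wrapped in a small script. This is a minimal sketch in a hypothetical `install_rook_operator` function; it assumes Helm 2 syntax as used in the Rook v1.1 docs (Helm 3 additionally requires a release name) and assumes `v1.1.4` is the matching chart version string, so check the versions available in the chart repository before pinning.

```shell
#!/usr/bin/env bash
# Sketch of the Rook operator install (Helm 2 syntax assumed).
# ROOK_CHART_VERSION is an assumed default matching the Rook version used here.
ROOK_CHART_VERSION="${ROOK_CHART_VERSION:-v1.1.4}"

install_rook_operator() {
  # Official Rook chart repository
  helm repo add rook-release https://charts.rook.io/release
  # The operator and the CephCluster created later both live in this namespace
  kubectl create namespace rook-ceph
  # Extra arguments (e.g. --set enableFlexDriver=true) are passed through to helm
  helm install --namespace rook-ceph rook-release/rook-ceph \
    --version "$ROOK_CHART_VERSION" "$@"
}
```

Calling `install_rook_operator --set enableFlexDriver=true` overrides a chart value without editing values.yaml.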

Note:

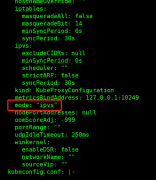

Depending on what your Kubernetes version supports, you can set `enableFlexDriver: true` in values.yaml.
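Before checking the deployment, you can block until the operator is ready with `kubectl rollout status`. In this sketch the Deployment name `rook-ceph-operator` and the DaemonSet name `rook-discover` are inferred from the pod names in the output below, not taken from the chart, and the function name `wait_for_rook_operator` is hypothetical. The operator creates the discover DaemonSet after it starts, so if the second command reports that the DaemonSet is not found, simply rerun it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for the operator Deployment, then the discover DaemonSet.
# Resource names are inferred from the pod names shown in this article.
wait_for_rook_operator() {
  local timeout="${1:-300s}"
  kubectl -n rook-ceph rollout status deployment/rook-ceph-operator --timeout="$timeout" &&
    kubectl -n rook-ceph rollout status daemonset/rook-discover --timeout="$timeout"
}
```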

Deployment result:

```
$ kubectl get pod -n rook-ceph
NAME                                  READY   STATUS    RESTARTS   AGE
rook-ceph-operator-5bd7d67784-k9bq9   1/1     Running   0          2d15h
rook-discover-2f84s                   1/1     Running   0          2d14h
rook-discover-j9xjk                   1/1     Running   0          2d14h
rook-discover-nvnwn                   1/1     Running   0          2d14h
rook-discover-nx4qf                   1/1     Running   0          2d14h
rook-discover-wm6wp                   1/1     Running   0          2d14h
```

2.3 Create the Ceph cluster

2.3.1 Label the OSD nodes

To manage and control the OSDs more easily, label the designated nodes so that the OSD pods are scheduled only on those nodes.
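Because the OSD `nodeAffinity` in the cluster manifest requires `ceph-role=osd`, every node listed under `storage.nodes` must carry that label. A minimal sketch that labels the four nodes used in this article's manifest and then confirms that at least three nodes are labeled; the function name `label_osd_nodes` and the `OSD_NODES` variable are hypothetical, and the node names should be replaced with your own.

```shell
#!/usr/bin/env bash
# Nodes listed under spec.storage.nodes in this article's CephCluster manifest
OSD_NODES="k8s1138026node k8s1138027node k8s1138031node k8s1138032node"

# Hypothetical helper: label each OSD node, then require at least 3 labeled nodes
label_osd_nodes() {
  local node count
  for node in $OSD_NODES; do
    # --overwrite makes the command safe to re-run
    kubectl label node "$node" ceph-role=osd --overwrite || return 1
  done
  # -l filters by label; --no-headers leaves one line per matching node
  count=$(kubectl get nodes -l ceph-role=osd --no-headers | wc -l)
  [ "$count" -ge 3 ]
}
```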

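Run the following for each node listed under `nodes` in the manifest below (`node1` is an example name):

```shell
kubectl label node node1 ceph-role=osd
```

2.3.2 Create the Ceph cluster from YAML

```shell
vim rook-ceph-cluster.yaml
```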

```yaml
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.4-20190917
  # Ceph directory on each node; holds the configuration and logs
  dataDirHostPath: /var/lib/rook
  mon:
    # Set the number of mons to be started. The number should be odd and between 1 and 9.
    # If not specified the default is set to 3 and allowMultiplePerNode is also set to true.
    count: 3
    # Enable (true) or disable (false) the placement of multiple mons on one node. Default is false.
    allowMultiplePerNode: false
  mgr:
    modules:
    - name: pg_autoscaler
      enabled: true
  network:
    # With hostNetwork, the OSDs and mgr use the host network, but the mons still use the
    # Kubernetes network, so this still does not solve access from outside Kubernetes
    # hostNetwork: true
  dashboard:
    enabled: true
  # cluster level storage configuration and selection
  storage:
    useAllNodes: false
    useAllDevices: false
    deviceFilter:
    location:
    config:
      metadataDevice:
      #databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
      #journalSizeMB: "1024"  # this value can be removed for environments with normal sized disks (20 GB or larger)
    # Node list; use the node names as registered in Kubernetes
    nodes:
    - name: k8s1138026node
      devices: # specific devices to use for storage can be specified for each node
      - name: "vdb"
      config: # configuration can be specified at the node level which overrides the cluster level config
        storeType: bluestore
    - name: k8s1138027node
      devices: # specific devices to use for storage can be specified for each node
      - name: "vdb"
      config: # configuration can be specified at the node level which overrides the cluster level config
        storeType: bluestore
    - name: k8s1138031node
      devices: # specific devices to use for storage can be specified for each node
      - name: "vdb"
      config: # configuration can be specified at the node level which overrides the cluster level config
        storeType: bluestore
    - name: k8s1138032node
      devices: # specific devices to use for storage can be specified for each node
      - name: "vdb"
      config: # configuration can be specified at the node level which overrides the cluster level config
        storeType: bluestore
  placement:
    all:
      nodeAffinity:
      tolerations:
    mgr:
      nodeAffinity:
      tolerations:
    mon:
      nodeAffinity:
      tolerations:
    # Node affinity is recommended for the OSDs
    osd:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-role
              operator: In
              values:
              - osd
      tolerations:
```

Apply the manifest:

```shell
kubectl apply -f rook-ceph-cluster.yaml
```

Check the result:

```
$ kubectl get pod -n rook-ceph -owide
NAME                                            READY   STATUS      RESTARTS   AGE   IP              NODE             NOMINATED NODE   READINESS GATES
csi-cephfsplugin-5dthf                          3/3     Running     0          20h   172.16.138.33   k8s1138033node   <none>           <none>
csi-cephfsplugin-f2hwm                          3/3     Running     3          20h   172.16.138.27   k8s1138027node   <none>           <none>
csi-cephfsplugin-hggkk                          3/3     Running     0          20h   172.16.138.26   k8s1138026node   <none>           <none>
csi-cephfsplugin-pjh66                          3/3     Running     0          20h   172.16.138.32   k8s1138032node   <none>           <none>
csi-cephfsplugin-provisioner-78d9994b5d-9n4n7   4/4     Running     0          20h   10.244.2.80     k8s1138031node   <none>           <none>
csi-cephfsplugin-provisioner-78d9994b5d-tc898   4/4     Running     0          20h   10.244.3.81     k8s1138032node   <none>           <none>
csi-cephfsplugin-tgxsk                          3/3     Running     0          20h   172.16.138.31   k8s1138031node   <none>           <none>
csi-rbdplugin-22bp9                             3/3     Running     0          20h   172.16.138.26   k8s1138026node   <none>           <none>
csi-rbdplugin-hf44c                             3/3     Running     0          20h   172.16.138.32   k8s1138032node   <none>           <none>
csi-rbdplugin-hpx7f                             3/3     Running     0          20h   172.16.138.33   k8s1138033node   <none>           <none>
csi-rbdplugin-kvx7x                             3/3     Running     3          20h   172.16.138.27   k8s1138027node   <none>           <none>
csi-rbdplugin-provisioner-74d6966958-srvqs      5/5     Running     5          20h   10.244.1.111    k8s1138027node   <none>           <none>
csi-rbdplugin-provisioner-74d6966958-vwmms      5/5     Running     0          20h   10.244.3.80     k8s1138032node   <none>           <none>
csi-rbdplugin-tqt7b                             3/3     Running     0          20h   172.16.138.31   k8s1138031node   <none>           <none>
rook-ceph-mgr-a-855bf6985b-57vwp                1/1     Running     1          19h   10.244.1.108    k8s1138027node   <none>           <none>
rook-ceph-mon-a-7894d78d65-2zqwq                1/1     Running     1          19h   10.244.1.110    k8s1138027node   <none>           <none>
rook-ceph-mon-b-5bfc85976c-q5gdk                1/1     Running     0          19h   10.244.4.178    k8s1138033node   <none>           <none>
rook-ceph-mon-c-7576dc5fbb-kj8rv                1/1     Running     0          19h   10.244.2.104    k8s1138031node   <none>           <none>
rook-ceph-operator-5bd7d67784-5l5ss             1/1     Running     0          24h   10.244.2.13     k8s1138031node   <none>           <none>
rook-ceph-osd-0-d9c5686c7-tfjh9                 1/1     Running     0          19h   10.244.0.35     k8s1138026node   <none>           <none>
rook-ceph-osd-1-9987ddd44-9hwvg                 1/1     Running     0          19h   10.244.2.114    k8s1138031node   <none>           <none>
rook-ceph-osd-2-f5df47f59-4zd8j                 1/1     Running     1          19h   10.244.1.109    k8s1138027node   <none>           <none>
rook-ceph-osd-3-5b7579d7dd-nfvgl                1/1     Running     0          19h   10.244.3.90     k8s1138032node   <none>           <none>
rook-ceph-osd-prepare-k8s1138026node-cmk5j      0/1     Completed   0          19h   10.244.0.36     k8s1138026node   <none>           <none>
rook-ceph-osd-prepare-k8s1138027node-nbm82      0/1     Completed   0          19h   10.244.1.103    k8s1138027node   <none>           <none>
rook-ceph-osd-prepare-k8s1138031node-9gh87      0/1     Completed   0          19h   10.244.2.115    k8s1138031node   <none>           <none>
rook-ceph-osd-prepare-k8s1138032node-nj7vm      0/1     Completed   0          19h   10.244.3.87     k8s1138032node   <none>           <none>
rook-discover-4n25t                             1/1     Running     0          25h   10.244.2.5      k8s1138031node   <none>           <none>
rook-discover-76h87                             1/1     Running     0          25h   10.244.0.25     k8s1138026node   <none>           <none>
rook-discover-ghgnk                             1/1     Running     0          25h   10.244.4.5      k8s1138033node   <none>           <none>
rook-discover-slvx8                             1/1     Running     0          25h   10.244.3.5      k8s1138032node   <none>           <none>
rook-discover-tgb8v                             0/1     Error       0          25h   <none>          k8s1138027node   <none>           <none>
```

```
$ kubectl get svc,ep -n rook-ceph
NAME                               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
service/csi-cephfsplugin-metrics   ClusterIP   10.96.36.5      <none>        8080/TCP,8081/TCP   20h
service/csi-rbdplugin-metrics      ClusterIP   10.96.252.208   <none>        8080/TCP,8081/TCP   20h
service/rook-ceph-mgr              ClusterIP   10.96.167.186   <none>        9283/TCP            19h
service/rook-ceph-mgr-dashboard    ClusterIP   10.96.148.18    <none>        7000/TCP            19h
service/rook-ceph-mon-a            ClusterIP   10.96.183.92    <none>        6789/TCP,3300/TCP   19h
service/rook-ceph-mon-b            ClusterIP   10.96.201.107   <none>        6789/TCP,3300/TCP   19h
service/rook-ceph-mon-c            ClusterIP   10.96.105.92    <none>        6789/TCP,3300/TCP   19h

NAME                                 ENDPOINTS                                                             AGE
endpoints/ceph.rook.io-block         <none>                                                                25h
endpoints/csi-cephfsplugin-metrics   10.244.2.80:9081,10.244.3.81:9081,172.16.138.26:9081 + 11 more...     20h
endpoints/csi-rbdplugin-metrics      10.244.1.111:9090,10.244.3.80:9090,172.16.138.26:9090 + 11 more...    20h
endpoints/rook-ceph-mgr              10.244.1.108:9283                                                     19h
endpoints/rook-ceph-mgr-dashboard    10.244.1.108:7000                                                     19h
endpoints/rook-ceph-mon-a            10.244.1.110:3300,10.244.1.110:6789                                   19h
endpoints/rook-ceph-mon-b            10.244.4.178:3300,10.244.4.178:6789                                   19h
endpoints/rook-ceph-mon-c            10.244.2.104:3300,10.244.2.104:6789                                   19h
endpoints/rook.io-block              <none>                                                                25h
```

2.4 Verify Ceph with the Rook toolbox

Deploy the Rook toolbox into Kubernetes. The deployment YAML is as follows:

Note: This article was recommended by Jiemi Blog; please keep the link when reposting: https://www.jmwww.net/file/web/10292.html
